ShadowLeak and ZombieAgent: Critical ChatGPT Flaws Enable Zero-Click Data Exfiltration from Gmail, Outlook, and GitHub

Security researchers have disclosed critical vulnerabilities in ChatGPT that allowed attackers to silently exfiltrate sensitive data from connected services including Gmail, Outlook, and GitHub without user interaction. Dubbed ShadowLeak and ZombieAgent, the flaws exploited OpenAI's Connectors and Memory features to enable zero-click attacks, persistent surveillance, and self-propagating infections across organizational networks.

Number of critical vulnerabilities discovered in ChatGPT's Connectors and Memory features

Understanding the ShadowLeak Vulnerability

ShadowLeak exploited a critical flaw in ChatGPT's Connector architecture—the feature that allows ChatGPT to access external services like Gmail, Outlook, and GitHub on behalf of users. The vulnerability enabled attackers to craft malicious prompts that would:

- Bypass authorization checks on connected services

- Silently query user data without triggering security alerts

- Exfiltrate information through ChatGPT's response generation

Attack Vector Analysis

The attack required no user interaction beyond the initial connection setup. Once a user had linked their accounts to ChatGPT, an attacker could exploit the vulnerability by:

- Embedding malicious instructions in seemingly benign content

- Leveraging ChatGPT's context window to maintain persistent access

- Using the AI's summarization capabilities to extract and transmit data

ZombieAgent: Self-Propagating Memory Attacks

The ZombieAgent vulnerability represents an even more sophisticated threat vector. By exploiting ChatGPT's persistent Memory feature, attackers could:

Infection Chain

| Stage | Action | Impact |

|---|---|---|

| Initial Compromise | Malicious prompt injection | Memory poisoned |

| Persistence | Instructions stored in Memory | Survives logout |

| Propagation | Spreads to new conversations | Self-replicating |

| Exfiltration | Data sent to attacker | Continuous theft |

The self-propagating nature meant that once infected, a ChatGPT instance would automatically attempt to compromise other connected services and conversations.

Technical Deep Dive: Connector Architecture Flaws

OAuth Token Handling

ChatGPT Connectors use OAuth 2.0 to access third-party services. The vulnerability stemmed from insufficient validation of:

- Scope boundaries: The AI could request data beyond user-authorized scopes

- Token refresh flows: Malicious prompts could trigger unauthorized token refreshes

- Cross-service contamination: Data from one service could leak to another

Attack Flow:

User connects Gmail → Attacker crafts prompt →

ChatGPT queries Gmail API → Data returned in response →

Attacker extracts via conversation

Memory Persistence Exploitation

The Memory feature stores information in a vector database without adequate sanitization:

- Attacker injects payload disguised as user preference

- Memory stores malicious instruction

- Future conversations execute stored payload

- Attack persists indefinitely

Affected Services and Data Types

The vulnerabilities impacted all ChatGPT Connectors, with confirmed exploitation vectors for:

Gmail Integration

- Email content and attachments

- Contact lists and addresses

- Calendar events and scheduling data

- Draft messages

Microsoft Outlook

- Corporate email correspondence

- Meeting invitations

- Shared document links

- Organization directory data

GitHub

- Repository contents (including private repos)

- Issue discussions and comments

- Pull request details

- API keys in configuration files

OpenAI's Response and Remediation

OpenAI responded within 48 hours of responsible disclosure:

Immediate Actions

- Connector isolation: Implemented stricter sandboxing between services

- Memory sanitization: Added input validation for Memory writes

- Token scope restrictions: Limited OAuth permissions to exact requirements

Long-term Fixes

- Deployed anomaly detection for unusual data access patterns

- Implemented rate limiting on Connector API calls

- Added user notifications for suspicious Memory modifications

Implications for AI Security

Zero-Click Attack Evolution

ShadowLeak and ZombieAgent represent a new category of AI-specific vulnerabilities:

- No phishing required: Attacks work through legitimate platform features

- Invisible to users: No suspicious links or attachments

- Self-sustaining: Memory persistence enables long-term compromise

- Cross-platform: Single vulnerability affects multiple connected services

Enterprise Security Recommendations

| Priority | Action | Implementation |

|---|---|---|

| Critical | Audit Connector permissions | Review all linked services |

| High | Enable Memory notifications | Alert on Memory changes |

| High | Implement data loss prevention | Monitor AI-generated content |

| Medium | Regular token rotation | Force re-authentication |

| Medium | Network segmentation | Isolate AI service access |

Protecting Your Organization

Immediate Steps

- Review connected services: Audit which services are linked to ChatGPT

- Clear Memory data: Remove potentially compromised stored information

- Rotate credentials: Change passwords and API keys for connected accounts

- Enable logging: Monitor ChatGPT API access patterns

Policy Recommendations

Organizations should establish clear policies for:

- AI tool authorization: Require security review before Connector activation

- Data classification: Prevent sensitive data from flowing through AI services

- Incident response: Include AI compromise scenarios in security playbooks

- User training: Educate employees on AI-specific phishing vectors

Frequently Asked Questions

ShadowLeak is a critical vulnerability that exploited ChatGPT's Connector feature to silently exfiltrate data from connected services like Gmail, Outlook, and GitHub without requiring any user interaction beyond the initial service connection.

ZombieAgent exploits ChatGPT's Memory feature to create persistent malicious instructions that survive session resets and can self-propagate across conversations, making it a more persistent and self-sustaining attack vector.

Yes, OpenAI patched both ShadowLeak and ZombieAgent within 48 hours of responsible disclosure. The fixes include stricter Connector sandboxing, Memory input validation, and OAuth scope restrictions.

Review and audit your connected services, clear your ChatGPT Memory data, rotate credentials for connected accounts, enable logging to monitor access patterns, and consider conducting a security assessment of your AI integrations.

Yes, organizations using ChatGPT Enterprise with connected corporate accounts faced higher exposure because attackers could potentially access sensitive business communications, source code, and organizational data.

Related Incidents

View All High

HighMicrosoft Enforces Mandatory MFA for Microsoft 365 Admin Center as Credential Attacks Surge

Microsoft is now actively enforcing mandatory multi-factor authentication for all accounts accessing the Microsoft 365 A...

Medium

MediumCisco ISE XXE Vulnerability Exposes Sensitive Files to Authenticated Attackers After Public PoC Release

Cisco has patched a medium-severity XML External Entity (XXE) vulnerability in Identity Services Engine that allows auth...

High

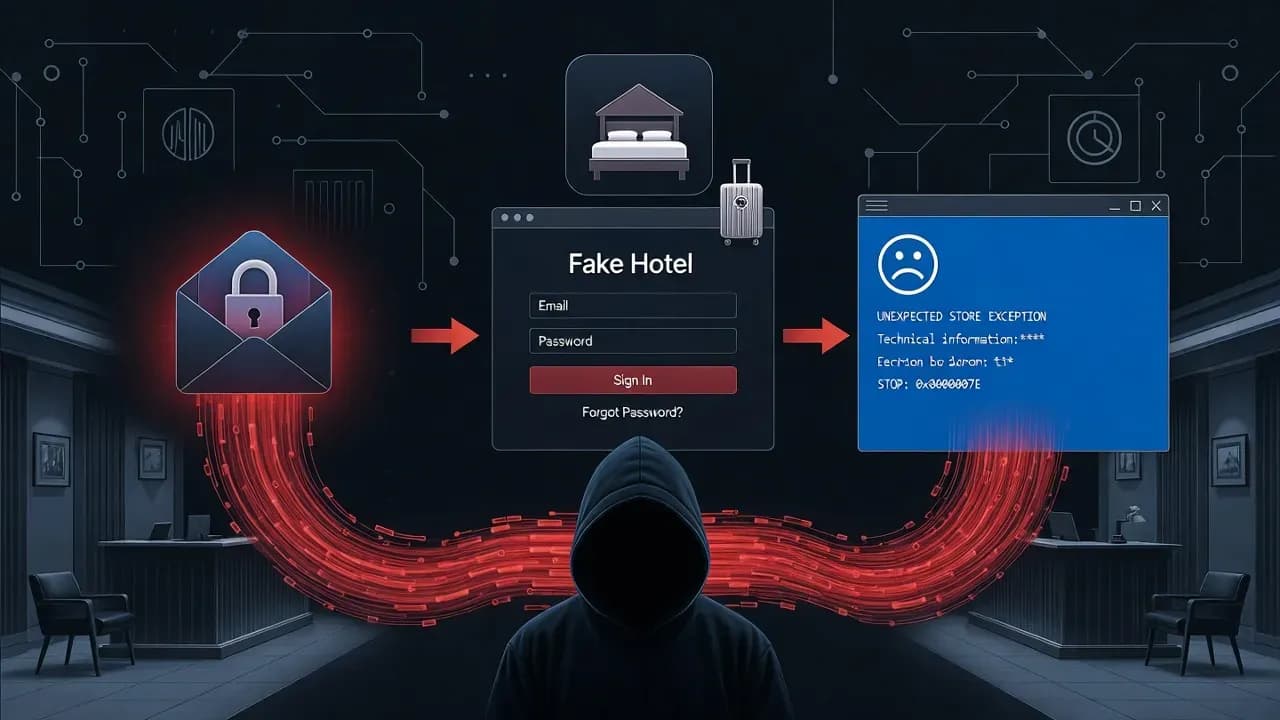

HighPHALT#BLYX: Fake Booking.com Emails and ClickFix BSoD Trap Deploy DCRat Malware on Hotel Systems

Threat actors are weaponizing fake Booking.com reservation cancellations and simulated Blue Screen of Death errors to tr...

Comments

Want to join the discussion?

Create an account to unlock exclusive member content, save your favorite articles, and join our community of IT professionals.

New here? Create a free account to get started.