Apple Confirms Google Gemini Will Power Siri, With Privacy Guardrails Remaining Central

Apple and Google have confirmed a multi-year deal to base future Apple Foundation Models on Gemini technology, powering a more personalized Siri while keeping Apple's privacy posture front and center.

The Partnership That Redefines Assistant Competition

Apple has confirmed what many in the AI ecosystem have been anticipating: future versions of Siri will be powered by models and cloud technology based on Google Gemini as part of a multi-year collaboration. The announcement matters less as a branding headline and more as a strategic admission that modern assistants are now judged by the quality of their underlying foundation model, not just by UI polish or a handful of intent handlers. For Apple, the core challenge has been closing the gap between Siri's legacy architecture and the new user expectation that an assistant can reason across context, summarize, and execute multi-step actions reliably.

At the same time, Apple is trying to win a different competition than pure capability: trust. The company is explicitly framing the Gemini partnership through the lens of privacy and control, stating that Apple Intelligence will continue to run on device and through Private Cloud Compute. The tension at the heart of this story is straightforward: Apple is outsourcing a critical layer of AI capability while attempting to preserve an identity built on minimizing data exposure. Whether that balance holds will define how users, enterprises, and regulators interpret Apple's next phase of AI.

How the Gemini Integration Works

The confirmed arrangement is not simply "Siri uses Gemini." Apple and Google describe it as the next generation of Apple Foundation Models being based on Gemini models and cloud technology, with those models helping power future Apple Intelligence features, including a more personalized Siri expected in 2026. That phrasing is important because it implies deeper integration than a single optional chatbot handoff. In practice, it suggests Apple is treating Gemini as a foundational substrate that can accelerate Siri's evolution into an assistant that can handle broader world knowledge requests, richer language understanding, and potentially more robust planning and summarization tasks.

The business logic is equally clear. Apple operates at a scale where quality failures become reputational problems, and delays translate into platform skepticism. Over the last year, Apple has been shipping Apple Intelligence capabilities while acknowledging that the Siri overhaul would take longer than planned. A partnership with Google provides a fast path to state-of-the-art model capability while Apple continues building and hardening its own approach to inference, device-side processing, and privacy-preserving cloud compute. It also reflects the reality that foundation models are becoming supply-chain infrastructure: companies either build a competitive one, or they license and adapt one from a peer with mature research and deployment pipelines.

The privacy framing rests on Apple's existing architecture: Apple Intelligence requests are first evaluated for on-device processing, and only more complex requests are routed to Private Cloud Compute, which Apple positions as an extension of device security into the cloud. For users and IT teams, the key technical question is where Gemini-derived capability runs, and under whose operational control. Apple's messaging emphasizes that the privacy model remains intact, which is a direct response to the predictable concern that "Google AI" automatically means "Google data collection." The partnership is therefore as much about governance and boundary-setting as it is about model quality.
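The routing order described above can be sketched in a few lines of Python. This is an illustration only: Apple has not published its decision logic, so the `Request` type, the `estimated_complexity` score, and the `ON_DEVICE_LIMIT` threshold are hypothetical names invented for this example.

```python
from dataclasses import dataclass
from enum import Enum


class Target(Enum):
    ON_DEVICE = "on_device"
    PRIVATE_CLOUD = "private_cloud_compute"


@dataclass(frozen=True)
class Request:
    text: str
    # Hypothetical score in [0, 1]; how complexity is really judged is not public.
    estimated_complexity: float


# Hypothetical cutoff chosen for illustration, not a documented value.
ON_DEVICE_LIMIT = 0.5


def route(request: Request) -> Target:
    """Try on-device first; send only more complex requests to the cloud tier."""
    if request.estimated_complexity <= ON_DEVICE_LIMIT:
        return Target.ON_DEVICE
    return Target.PRIVATE_CLOUD
```

The point of the sketch is the ordering, not the threshold: the local path is the default, and the cloud path is the exception that has to be justified.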

What This Means for the AI Landscape

This deal reshapes competitive narratives in consumer AI and enterprise endpoints at the same time. For Google, being embedded into Siri and Apple Intelligence expands distribution into one of the largest device ecosystems on the planet, reinforcing Gemini as a default layer in everyday workflows. For Apple, it signals a willingness to be pragmatic: user experience and reliability now trump the optics of being fully vertically integrated at every layer of the AI stack. The market impact is not limited to product perception; it strengthens Google's position in the broader contest to become the "default model provider" across platforms, similar to how search defaults shaped a prior era.

For OpenAI and other model providers, the message is more nuanced than a simple win or loss. Apple's earlier approach let Siri hand certain requests to external systems such as ChatGPT, and Apple has publicly indicated interest in multiple integrations over time. The Gemini move, however, appears to shift the center of gravity from "optional extension" to "foundation layer," which is a different tier of strategic value. A foundation role affects performance, latency expectations, safety constraints, and developer roadmaps across the OS.

Regulators will also watch this closely. Apple and Google already operate within a long-standing commercial relationship, and any deepening partnership between two platform gatekeepers tends to attract scrutiny, especially when it concerns default behaviors and user choice. Even if Apple maintains strict technical boundaries around data, the optics of consolidating core AI capability into a small number of global providers will intensify debates about competition, interoperability, and the long-term resilience of the AI supply chain.

The Real Story: Owning the Policy Layer

The most interesting part of this announcement is not that Apple "picked the best model." It is that Apple is effectively redefining what it means to own the AI experience. In the prior platform era, owning the stack meant owning the OS, the device silicon, and the core services. In the generative era, it increasingly means owning the policy layer: routing decisions, privacy enforcement, data minimization, safety constraints, and user consent flows. Apple is betting that if it owns the governance and runtime environment, it can license model capability without losing the trust differential that separates it from ad-driven ecosystems.

Private Cloud Compute becomes the linchpin. Apple has designed it to reduce the incentive and the technical ability to retain user data, while still enabling computationally heavy requests. That approach is not merely marketing; it is a product strategy that turns privacy into a system design constraint. The Gemini partnership stress-tests that constraint, because any ambiguity about where requests execute and what metadata is generated will be interpreted as a weakening of Apple's privacy posture. Apple therefore needs this integration to be operationally clean, explainable to users, and defensible to enterprise buyers who treat assistants as potential data exfiltration paths.

There is also a second-order effect: Apple is implicitly acknowledging that assistant quality now depends on fast iteration of foundation models, not annual OS release cycles. Google's AI organization can iterate model capability on a cadence that differs from Apple's platform cadence. The success of this partnership will depend on how those cadences are synchronized, how regressions are avoided, and how Apple tests and validates model behavior at scale without sacrificing time-to-market. This is where "multi-year partnership" becomes a technical risk management story, not just a procurement headline.

What Users, Enterprises, and Developers Should Expect

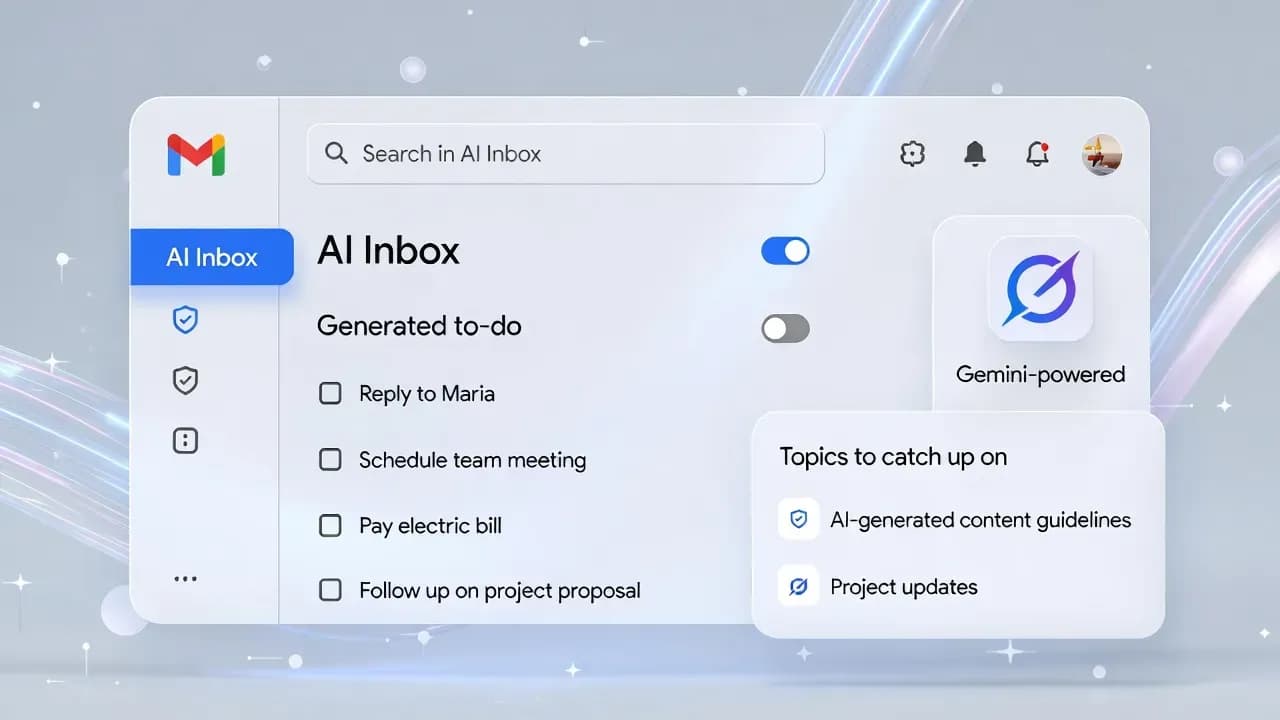

For end users, the most immediate change will likely be felt as Siri becoming less brittle: better natural language handling, stronger world knowledge responses, and improved follow-through on more complex requests. The trade-off is that users will want clarity about when a request is processed locally versus routed to cloud compute, and what controls exist to restrict that behavior. Apple's existing Apple Intelligence settings already point to a future where users can manage extensions and confirmation prompts, and the Gemini integration will put more pressure on making those controls understandable rather than buried.

For enterprises, the story is governance. IT teams should assume employees will use the assistant for work tasks the moment it becomes competent, which means data classification, DLP, and usage policy must mature ahead of adoption. The architectural promise of on-device processing and Private Cloud Compute is attractive, but it does not eliminate operational questions about logging, auditing, retention, and acceptable use. Organizations that have been cautious about sending data to third-party AI services may find Apple's model more palatable, but they will still need clear internal guidance on what content is appropriate for assistant workflows.
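As one way to picture that internal guidance, the sketch below gates assistant use on a data classification label. The four sensitivity levels and the `MAX_ASSISTANT_SENSITIVITY` ceiling are assumptions made for illustration; they do not describe any Apple, Google, or DLP vendor feature, and a real policy would come from an organization's own classification scheme.

```python
from enum import IntEnum


class Sensitivity(IntEnum):
    """Hypothetical classification labels, ordered from least to most sensitive."""
    PUBLIC = 0
    INTERNAL = 1
    CONFIDENTIAL = 2
    RESTRICTED = 3


# Hypothetical policy ceiling: content above this label stays out of assistant workflows.
MAX_ASSISTANT_SENSITIVITY = Sensitivity.INTERNAL


def may_send_to_assistant(label: Sensitivity) -> bool:
    """Return True when content with this label is allowed in assistant prompts."""
    return label <= MAX_ASSISTANT_SENSITIVITY
```

A rule this simple will not cover every case, but writing it down forces the decision about where the ceiling sits before employees make that decision themselves.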

For developers and product teams, the bigger implication is that Siri's evolution could accelerate. If Apple delivers more reliable intent resolution and richer assistant capabilities, it may expand what developers can reasonably design around. The opportunity is better user experiences; the risk is unpredictability if assistant behavior changes across model updates. Planning for that means treating assistant interactions like an evolving dependency, with careful UX fallbacks and a realistic approach to error handling.
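Treating the assistant as an evolving dependency might look like the following sketch, which wraps a call in a guard and drops back to a deterministic path whenever the result is missing, unexpected, or an error. The `assistant` and `fallback` callables, the `AssistantError` type, and the idea of an intent allow-list are hypothetical stand-ins, not part of any Apple SDK.

```python
from typing import Callable, Collection, Optional


class AssistantError(Exception):
    """Raised by the hypothetical assistant call when it cannot handle a request."""


def resolve_with_fallback(
    utterance: str,
    assistant: Callable[[str], Optional[str]],
    fallback: Callable[[str], str],
    allowed_intents: Collection[str],
) -> str:
    """Return the assistant's intent if it is one the app supports, else the fallback."""
    try:
        intent = assistant(utterance)
    except AssistantError:
        # The assistant failed outright; keep the user on a working path.
        return fallback(utterance)
    if intent is None or intent not in allowed_intents:
        # A model update may start returning intents the app never expected.
        return fallback(utterance)
    return intent
```

The allow-list is the important design choice here: it means a change in model behavior degrades to the fallback experience instead of triggering an action the app was never built to handle.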

The Bottom Line

Apple's confirmation that Gemini-based technology will power future Siri and Apple Intelligence features is a strategic pivot that reframes the assistant race. The question is no longer whether Apple can build every component alone, but whether it can enforce a privacy-first runtime while integrating external model capability at scale. If Apple executes, users get a smarter Siri without feeling they traded away control. If it missteps, the backlash will not be about benchmarks, but about trust, transparency, and whether "privacy by design" still applies when the foundation model comes from a partner.
